HPE, Nvidia partner on scalable hardware platform for AI development – Business

Hewlett Packard Enterprise Co. is the latest systems maker to join the Nvidia Corp. express with today’s announcement of a lineup of co-developed systems optimized for artificial intelligence and joint sales and marketing integrations aimed at generative AI.

During its HPE Discover conference starting today in Las Vegas, the company said the packaged systems can boost productivity for AI engineers, data scientists and operations teams by up to 90%.

HPE Private Cloud AI features deep integration between Nvidia AI computing, networking and software with HPE’s AI-specific storage and computing products offered via the GreenLake cloud. GreenLake is a portfolio of cloud and as-a-service options that are intended to deliver cloud-like capabilities regardless of information technology infrastructure, with pay-as-you-go pricing.

The offering is powered by a new OpsRamp AI copilot for IT operations and comes in four configurations to support various AI workloads.

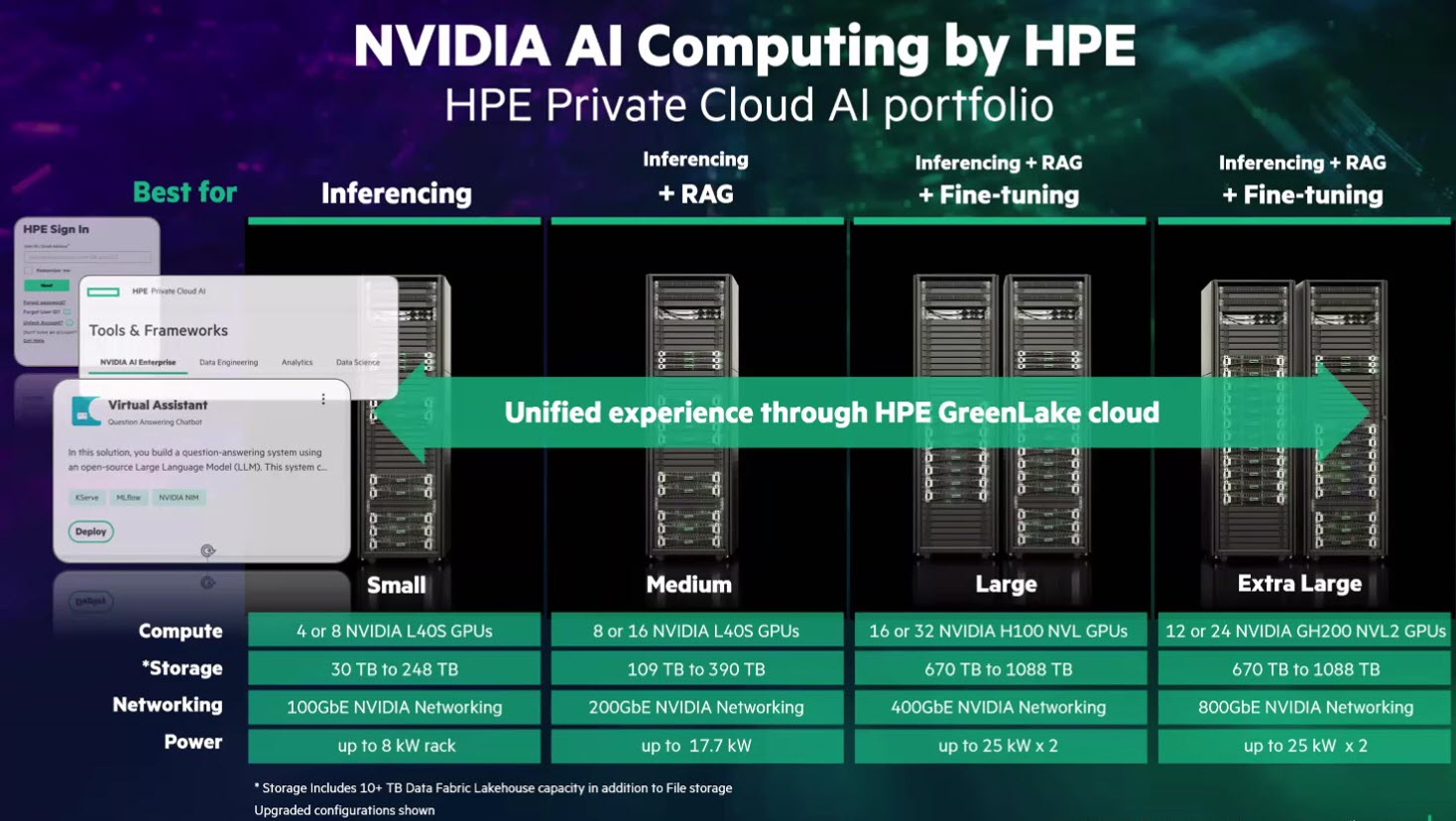

HPE Private Cloud AI supports inferencing, fine-tuning and retrieval-automated generation workloads using proprietary data. It combines data privacy, security, transparency and governance controls with ITOps and AIOps capabilities.

AIOps uses machine learning and data analytics to automate and improve IT operations tasks. ITOps encompasses a range of activities that ensure the smooth functioning of an organization’s IT infrastructure.

Built on Nvidia AI Enterprise

The foundation of the AI and data software stack is the Nvidia AI Enterprise software platform and inference microservices. Nvidia AI Enterprise is built to develop and deploy copilots and other generative AI applications quickly. NIM is a set of microservices for optimized AI model inferencing.

HPE AI Essentials software is a set of curated AI and data foundation tools with a unified control plane that is adaptable to various uses. It includes enterprise support and AI services such as data and model compliance, with extensible features that ensure AI pipelines are in compliance, explainable and reproducible, HPE said.

“It’s ready to boot up,” said Fidelma Russo, HPE’s chief technology officer. “You plug it in, connect to the cloud, and you’re up and running three clicks later.”

HPE promotes the package as an on-premises alternative to AI model training and RAG in the cloud. “Our target market is enterprises who want to move to AI and realize that running those models in the cloud will crush their budgets,” said Neil MacDonald, executive vice president and general manager of HPE’s high-performance computing and AI business.

Russo said deploying AI inferencing on-premises “is four to five times more cost-effective for inferencing workloads while keeping your data private.”

The infrastructure stack includes Nvidia Spectrum-X Ethernet networking, HPE GreenLake File Storage, and HPE ProLiant servers with support for Nvidia L40S, Nvidia H100 NVL Tensor Core graphic processing units and the Nvidia GH200 NVL2 rack-scale platform.

Four configurations

The offering comes in four configurations based on ProLiant processors ranging from small to extra large. Each is modular and expandable.

- The small configuration includes 428 Nvidia L40S GPUs, up to 248 terabytes of storage and 100-gigabit Ethernet networking in an 8-kilowatt rack.

- The midsize configuration comes with up to 16 L40S GPUs, the maximum 390 terabytes of storage and 200-gigabit Ethernet networking in a 17.7-kilowatt rack.

- The large model features up to 32 Nvidia H100 NVL GPUs, up to 1.1 petabytes of storage and 400-gigabit Ethernet networking in a twin rack configuration at up to 25 kilowatts each.

- The extra-large model combines up to 24 Gh200 NVL2 GPUs, a maximum of 1.1 petabytes of storage and 800-gigabit Ethernet networking in a twin 25-kilowatt rack configuration.

HPE said it intends to support Nvidia’s GB200 NVL72/NVL2 and the new Nvidia Blackwell, Rubin and Vera architectures.

Greenlake provides management and observability services for endpoints, workloads and data across hybrid environments. OpsRamp provides observability for the full Nvidia accelerated computing stack, including NIM and AI software, Nvidia Tensor Core GPUs, AI clusters and Nvidia Quantum InfiniBand and Spectrum Ethernet switches.

The new OpsRamp operations copilot utilizes Nvidia’s computing platform to analyze large datasets for insights with a conversational assistant. OpsRamp will also integrate with CrowdStrike Inc. application program interfaces to provide a unified service map view of endpoint security across infrastructure and applications.

HPE and Nvidia will both sell the server packages along with service providers Deloitte LLP, HCL Technologies Ltd., Infosys Ltd., Tata Consultancy Service Ltd. and Wipro Ltd.

Targeting AI energy costs

Separately, HPE and engineering giant Danfoss A/S announced a collaboration to deliver an off-the-shelf heat recovery module as part of a package to help organizations developing AI models reduce the heat generated by IT equipment.

The offering combines HPE’s scalable Modular Data Center in the form of small, high-density containers that can be deployed nearly anywhere and incorporates such technologies as direct liquid cooling to reduce overall energy consumption by 20%. Danfoss will provide heat reuse modules that capture excess heat from data centers and direct it to onsite and neighboring buildings and industries. Danfoss will also provide oil-free compressors that improve data center cooling efficiency by up to 30%.