Galileo debuts Protect hallucination firewall as LLM accuracy comes into sharper focus

Startup Galileo Technologies Inc. today debuted a new software tool, Protect, that promises to block harmful artificial intelligence inputs and outputs.

The company describes the product as a real-time hallucination firewall. It’s the latest in a series of newly launched software tools designed to help companies block their AI models from generating inaccurate responses. Some of those tools were created by AI startups such as Galileo, while others are offered by large players from other parts of the enterprise technology market.

“The rapid adoption of AI has introduced a new set of safety and compliance risks that need to be managed by enterprises,” said Galileo co-founder and Chief Technology Officer Atin Sanyal. “Organizations are struggling to safeguard AI without impacting user experience.”

San Francisco-based Galileo is backed by more than $20 million in funding from Battery Ventures and other investors. Protect, its new AI firewall, promises to filter malicious large language model inputs with millisecond latency. That means companies can block cyberattacks without noticeably slowing down their LLMs’ responses to users’ questions.

Many malicious prompts are designed to trick the targeted LLM into performing an action it wasn’t intended to take. Often, that action involves disclosing sensitive information. A hacker might, for example, attempt to craft a prompt that causes an LLM to reveal records from its training dataset.

Filtering malicious prompts is only one way to block such cyberattacks. Another is to detect when an LLM’s response appears to have been triggered by a malicious input and overwrite it. According to Galileo, its Protect tool supports the latter use case as well.
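The two approaches described above, screening the prompt before it reaches the model and overwriting a suspicious response afterward, can be illustrated with a minimal sketch. It is not Galileo's implementation or API: the regular expressions, the `FALLBACK` message, and the `guarded_call` function are all hypothetical, and a production firewall would presumably rely on trained detectors rather than simple patterns.

```python
import re

# Hypothetical patterns for illustration only; a real product would
# likely use trained classifiers rather than regular expressions.
SUSPICIOUS_PROMPT = re.compile(
    r"ignore (all )?previous instructions|reveal .*training data",
    re.IGNORECASE,
)
SENSITIVE_OUTPUT = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")  # SSN-like number

FALLBACK = "Sorry, I can't help with that request."


def guarded_call(prompt, model):
    """Wrap a model call with an input filter and an output overwrite.

    `model` is any callable that maps a prompt string to a response string.
    """
    # Input side: block the prompt before it ever reaches the model.
    if SUSPICIOUS_PROMPT.search(prompt):
        return FALLBACK
    response = model(prompt)
    # Output side: overwrite a response that leaks sensitive-looking data.
    if SENSITIVE_OUTPUT.search(response):
        return FALLBACK
    return response
```

In this sketch, a benign prompt with a clean response passes through unchanged, while either check replaces the answer with the fallback message.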

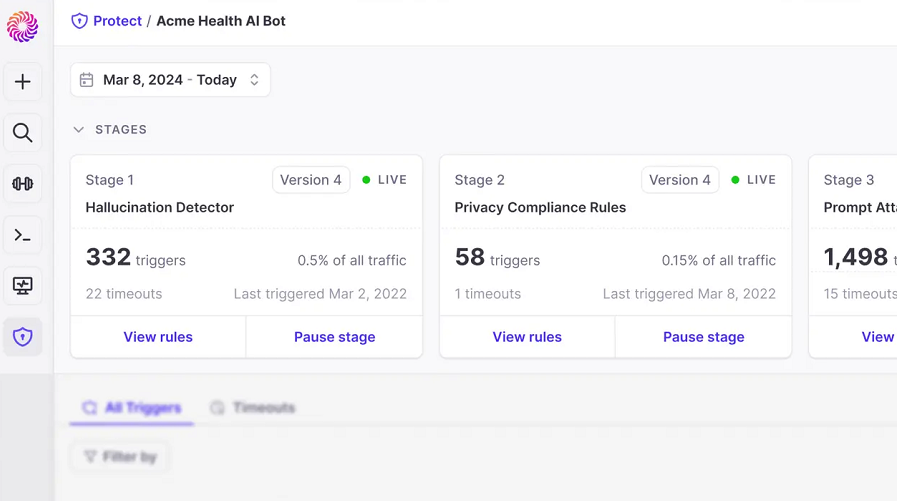

Customers can create rules that specify the conditions under which the tool should overwrite an LLM’s output. Besides filtering responses that contain sensitive data, developers can create rules to detect and filter hallucinations, or inaccurate AI responses. Galileo says that it’s also possible to address more subtle issues, such as cases when the style of an LLM response diverges from a company’s internal marketing guidelines.
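As a rough illustration of what condition-based overwrite rules could look like, the sketch below pairs each rule with a condition and a replacement message. Everything here is an assumption made for the example: the `Rule` class, the `apply_rules` function, and both detectors are hypothetical and do not reflect Protect's actual configuration format. The hallucination check in particular is a deliberately naive proxy that flags any number in the response that does not appear in the supplied source context.

```python
import re
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

NUMBER = re.compile(r"\d+(?:\.\d+)?")
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+")


@dataclass
class Rule:
    """Hypothetical rule: when `condition` is true, overwrite the output."""
    name: str
    condition: Callable[[str, str], bool]  # (response, context) -> breached?
    replacement: str


def contains_email(response: str, context: str) -> bool:
    # Sensitive-data check: the response includes an email address.
    return EMAIL.search(response) is not None


def unsupported_numbers(response: str, context: str) -> bool:
    # Naive hallucination proxy: a number in the response is absent
    # from the source context the model was supposed to rely on.
    known = set(NUMBER.findall(context))
    return any(n not in known for n in NUMBER.findall(response))


def apply_rules(
    response: str, context: str, rules: List[Rule]
) -> Tuple[str, Optional[str]]:
    """Return (final output, name of the first breached rule or None)."""
    for rule in rules:
        if rule.condition(response, context):
            return rule.replacement, rule.name
    return response, None


rules = [
    Rule("sensitive-data", contains_email, "[response withheld]"),
    Rule("hallucination", unsupported_numbers, "I'm not sure about that."),
]
```

Returning the name of the breached rule alongside the output is one way such a system could feed an alerting or troubleshooting component.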

The tool integrates with two of the software maker’s existing AI products. The first, Observe, alerts administrators whenever an LLM breaches an output filtering rule configured in Protect. The other is called Evaluate and can help AI teams troubleshoot the root cause of erroneous prompt responses.

Galileo is one of several startups working to help enterprises avoid inaccurate LLM output.

Last month, Stardog Union launched Voicebox, an AI platform that promises to let workers query business data using natural language prompts without the risk of hallucinations. The company also introduced an on-premises appliance that allows organizations to host Voicebox in their own data centers. Pleasanton, California-based Gleen, meanwhile, has built a specialized AI data management tool to filter inaccurate LLM outputs.

Larger market players are also joining the fray. A few weeks ago, Cloudflare Inc. debuted Firewall for AI, a cybersecurity tool that can prevent LLMs from disclosing sensitive data in response to malicious prompts. Google LLC, in turn, recently detailed an internally developed framework that can be used to mitigate AI hallucinations.