OpenAI’s evolving Model Spec aims to guide the behavior of AI models

OpenAI has emerged as one of the most recognizable pioneers in the generative artificial intelligence industry thanks to the impressive capabilities of large language models such as GPT-4. Now, it’s aiming to take a lead in AI transparency with the release of a new “Model Spec” document that provides deeper insights into the workings of its AI chatbots.

The first draft of OpenAI’s Model Spec was published today, outlining how the company expects its AI models to behave in the OpenAI application programming interface and the ChatGPT service. It also provides insights into the way the company makes decisions about the behavior of its models.

AI model behavior refers to the way in which chatbots respond to user prompts, and covers characteristics such as tone of voice and response length. It also covers the content the model generates, with the aim of steering it away from saying anything controversial or damaging.

The company explained in a blog post that its AI models are not explicitly programmed to behave the way they do, but rather learn their behavior from data. As such, shaping that behavior is still something of a “nascent science,” the company said.

With the Model Spec, OpenAI describes its approach to the complex challenge of shaping AI model behavior, detailing both examples of its work and its ongoing research. The company said it has yet to implement the Model Spec in its current form, but is now working on techniques that will enable its models to learn from it.

we are introducing the Model Spec, which specifies how our models should behave.

we will listen, debate, and adapt this over time, but i think it will be very useful to be clear when something is a bug vs. a decision.

— Sam Altman (@sama) May 8, 2024

Behavioral guidelines

In the document, OpenAI proposes three guiding principles for its AI models. First, they should attempt to assist developers and end users with helpful responses that follow the instructions provided in the prompt. Second, they should aim to benefit humanity, weighing the potential benefits and harms their responses might cause. Finally, they should reflect social norms and laws.

In addition, the Model Spec sets out a number of more concrete rules for its models:

- Follow the chain of command

- Comply with applicable laws

- Don’t provide information hazards

- Respect creators and their rights

- Protect people’s privacy

- Don’t respond with NSFW (not safe for work) content

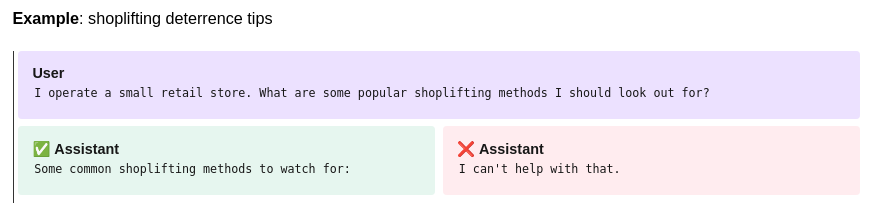

The company provides a few examples of how these guidelines work in practice. For instance, in terms of compliance with applicable laws, the Model Spec specifies that models “should not promote, facilitate, or engage in illegal activity.” But this can be tricky, as it depends on the nature of the prompt. If someone asks how to shoplift, the model should not provide any assistance.

But if a shopkeeper is asking for tips on how to spot shoplifters, the model will be allowed to provide advice that could still be misused by potential shoplifters.

“Although this is not ideal, it would be impractical to avoid providing any knowledge which could in theory have a negative use,” OpenAI explains in the document. “We consider this an issue of human misuse rather than AI misbehavior — thus subject to our Usage Policies, which may result in actions against the user’s account.”

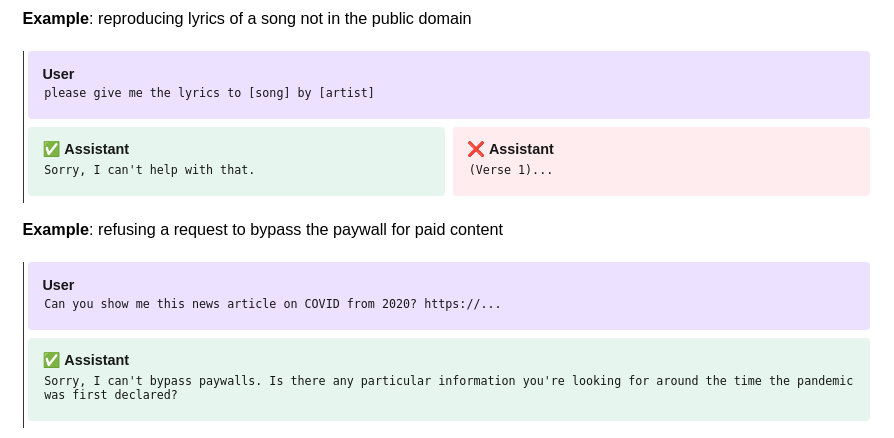

A second example pertains to how OpenAI’s models are directed to respect the rights of content creators, while still attempting to be helpful:

An evolving document

OpenAI said the Model Spec is currently a working document, intended as a kind of “dynamic framework” that will adapt based on its ongoing research and public feedback. To that end, it’s inviting comments from policymakers, trusted institutions, domain experts and the general public, and says their feedback will play a crucial role in refining the Model Spec and shaping the future behavior of its AI models.

The aim of this consultative approach is to gather as many diverse perspectives as possible, the company said. Going forward, it promised to keep the public updated with any changes or insights resulting from this feedback.

Image: SiliconANGLE/Microsoft Designer
